Human Character Mapping

January 1, 2015

Overview

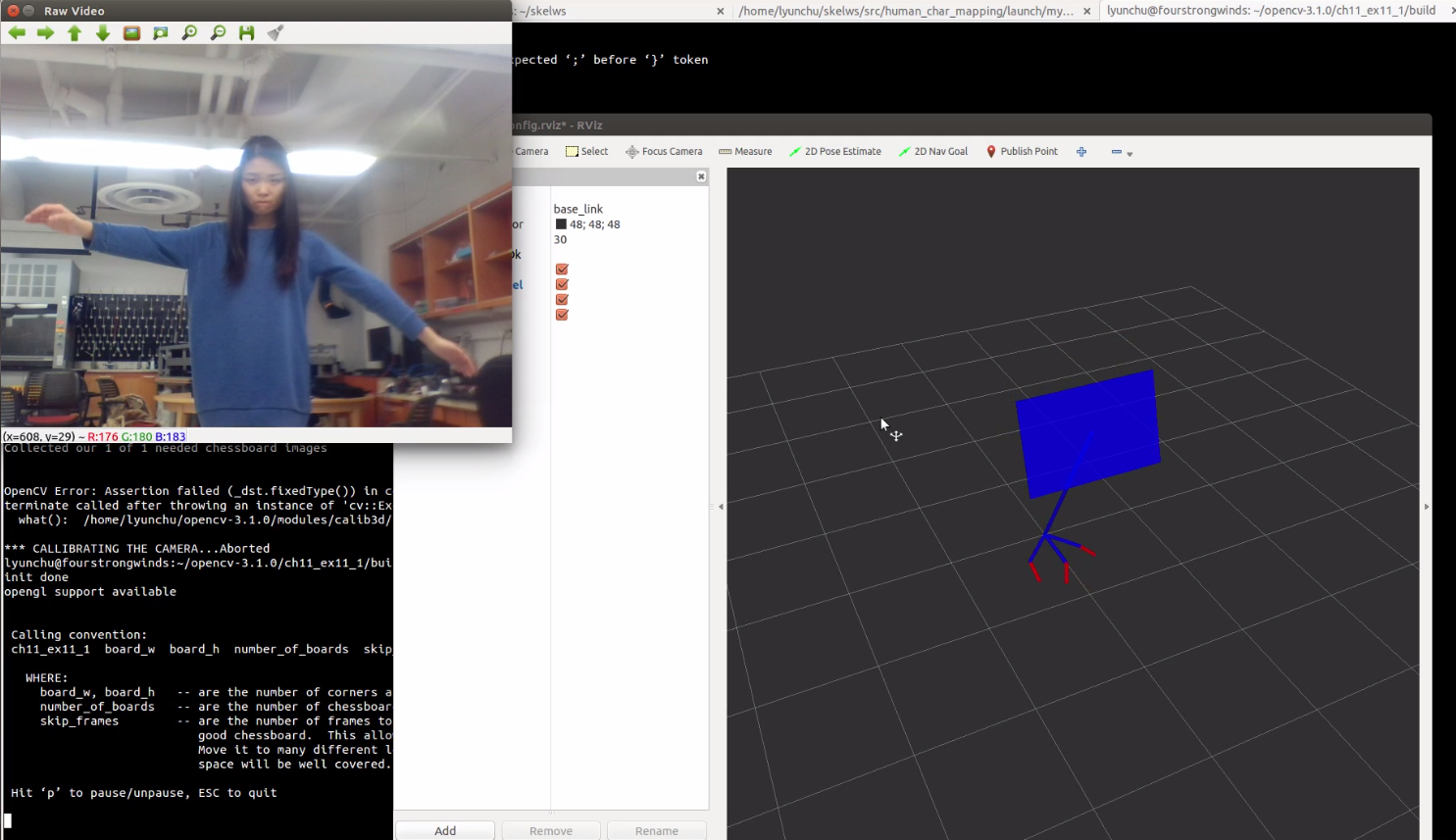

3D computer animation is widely used to create blockbuster films and computer games. The goal of this project is to leverage Kinect-based OpenNI skeleton tracking to animate virtual characters in real time. A music stand was chosen as the virtual character because it has enough degrees of freedom (DOF) for interesting 3D animation.

Most computer animation (CA) systems decompose a virtual character using a representation of the character’s skeleton. The skeleton is used to compute the positions and orientations of selected parts of the character’s mesh. In this case, the skeleton was written in URDF and visualized in RViz under ROS.

The Microsoft Kinect provides a convenient physical interface for driving the character.

Pre-requisites

- Download OpenNI on this site

- Install openni_tracker, the main skeleton tracking package for ROS

Details

- The music stand has one spherical joint and seven revolute joints, for a total of 10 degrees of freedom. The spherical joint links the body of the music stand and one of its legs.

  The URDF of the character model was written in my_robot.urdf. The spherical joint was created by chaining three revolute joints together. Between every two consecutive joints, a ‘virtual link’ was placed, which only needs a name and no other parameters.

  The URDF can be visualized in RViz, the 3D visualization environment for ROS. Set the parameter `gui` to 1 and `self_joint_publisher` to 0 with the following command: `roslaunch human_char_mapping myrobot.launch gui:=1 self_joint_publisher:=0`. A GUI will pop up that allows controlling the values of all the non-fixed joints.

-

Projection of NITE transform data onto the human model.

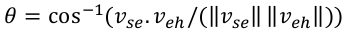

The joint angle of a revolute joint such as the angle of the left elbow was decided by:

where

Vseis the vector from the left shoulder to elbow, andVehis the vector from the left elbow to hand. Using TransformListener, vectors were obtained by callinglookupTransform()fromtarget_frametosource_frameThree Euler angels for the spheical joint were derived from the NITE rotation data between

/right_elbowand/right_shoulderSince the original data from TransformListener is in Quaternion, the Transformations.py module was used for conversion. -

- Mapping from human joint angles to character joints is illustrated in the following sketch. The joint connecting link 3 and link 7 is a spherical joint and the rest are revolute joints.

  The real-time mapping was implemented in first.py. The utility functions can be found in utility.py. Once the calibration is completed, type `roslaunch human_char_mapping myrobot.launch` in another terminal; an RViz window will pop up and allow the user to visualize the animation.

- The system was developed in ROS using Python on Linux. The code can be found in this repository on GitHub.
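The spherical joint described above (three chained revolute joints separated by name-only virtual links) can be sketched as follows. This is only an illustration, not the contents of my_robot.urdf: the link names `link3` and `link7`, the joint and virtual-link names, the axis order, and the limit values are all assumptions chosen for the example.

```python
import xml.etree.ElementTree as ET


def spherical_joint_urdf(parent, child, name="ball"):
    """Build URDF elements that emulate a spherical joint.

    Three revolute joints about x, y and z are chained from `parent` to
    `child`. Between consecutive joints sits a 'virtual link' that has a
    name and nothing else. All names and limits here are hypothetical.
    """
    axes = [("roll", "1 0 0"), ("pitch", "0 1 0"), ("yaw", "0 0 1")]
    elements = []
    prev = parent
    for i, (suffix, axis) in enumerate(axes):
        is_last = i == len(axes) - 1
        nxt = child if is_last else "%s_virtual_%d" % (name, i + 1)

        joint = ET.Element("joint", name="%s_%s" % (name, suffix), type="revolute")
        ET.SubElement(joint, "parent", link=prev)
        ET.SubElement(joint, "child", link=nxt)
        ET.SubElement(joint, "origin", xyz="0 0 0", rpy="0 0 0")
        ET.SubElement(joint, "axis", xyz=axis)
        # Revolute joints need a <limit>; these values are placeholders.
        ET.SubElement(joint, "limit", lower="-1.57", upper="1.57",
                      effort="10", velocity="1")
        elements.append(joint)

        if not is_last:
            # Virtual link: only a name, no visual/inertial/collision data.
            elements.append(ET.Element("link", name=nxt))
        prev = nxt
    return elements


def to_xml(elements):
    """Serialize the elements so they can be pasted inside a <robot> tag."""
    return "\n".join(ET.tostring(e, encoding="unicode") for e in elements)


if __name__ == "__main__":
    print(to_xml(spherical_joint_urdf("link3", "link7")))
```

Because the three joint origins coincide, the chain behaves like a single ball joint with three rotational degrees of freedom, which is consistent with the count of 10 DOF given above (3 + 7).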
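For the revolute joints, the text states that the elbow angle was derived from the shoulder-to-elbow and elbow-to-hand vectors. One common way to do this, assumed here and not confirmed by the text, is to take the angle between the two vectors via the normalized dot product. The function names and sample positions below are hypothetical.

```python
import math


def vector_between(p_from, p_to):
    """Vector pointing from p_from to p_to (both are (x, y, z) tuples)."""
    return tuple(b - a for a, b in zip(p_from, p_to))


def angle_between(v1, v2):
    """Angle in radians between two 3D vectors, in the range [0, pi]."""
    dot = sum(a * b for a, b in zip(v1, v2))
    n1 = math.sqrt(sum(a * a for a in v1))
    n2 = math.sqrt(sum(b * b for b in v2))
    if n1 == 0.0 or n2 == 0.0:
        raise ValueError("cannot compute an angle with a zero-length vector")
    # Clamp to guard against rounding pushing the cosine outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (n1 * n2)))
    return math.acos(cos_theta)


if __name__ == "__main__":
    # Made-up joint positions in metres, standing in for tracker output.
    shoulder = (0.0, 0.0, 0.0)
    elbow = (0.3, 0.0, 0.0)
    hand = (0.3, 0.25, 0.0)
    v_se = vector_between(shoulder, elbow)
    v_eh = vector_between(elbow, hand)
    print(angle_between(v_se, v_eh))
```

With this definition a fully extended arm gives an angle of 0 and a right-angle bend gives pi/2; an offset or sign flip may be needed to match the zero position of the corresponding character joint.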
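The quaternion-to-Euler conversion used for the spherical joint was done with the Transformations.py module in the original code. The sketch below is a standard-library stand-in that shows what such a conversion computes, assuming an (x, y, z, w) quaternion and a roll-pitch-yaw (fixed-axis x, y, z) convention. Both the convention and the function name are assumptions, so the result should be checked against the module actually used.

```python
import math


def quaternion_to_rpy(q):
    """Convert a quaternion (x, y, z, w) to (roll, pitch, yaw) in radians.

    Assumes rotations about the fixed x, y and z axes, in that order.
    """
    x, y, z, w = q
    norm = math.sqrt(x * x + y * y + z * z + w * w)
    if norm == 0.0:
        raise ValueError("zero-norm quaternion")
    x, y, z, w = x / norm, y / norm, z / norm, w / norm

    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp before asin so rounding near +/-90 degrees cannot raise an error.
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw


if __name__ == "__main__":
    # A quarter turn about the z axis.
    half = math.pi / 4
    print(quaternion_to_rpy((0.0, 0.0, math.sin(half), math.cos(half))))
```

Each of the three angles can then drive one of the three chained revolute joints that make up the spherical joint, provided the joint axes are ordered to match the chosen Euler convention.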

Future work

- Map all degrees of freedom of the human model to a human-like character

- Animate mapping (currently done manually)

- Topology mapping optimization

- Gesture recognition